Switches represent the features and technologies that can be used to respond to the requirements in emerging network design. Some basic operations and technologies of switches are as follows:

- Fast Convergence: Switches stipulate that the network must adapt quickly to network topology changes.

- Deterministic Paths: Switches provide desirability of a given path to a destination for certain applications or user groups.

- Deterministic Failover: Switches specify that a mechanism be in place to ensure that the network is operational at all times.

- Scalable Size and Throughput: Switches provide the infrastructure that must handle the increased traffic demands.

- Centralized Applications: Switches dictate that the centralized applications be available to support most or all users on the network.

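- The New 20/80 Rule: This feature of switches focuses on the shift in traditional traffic patterns, in which only about 20 percent of traffic stays local to the workgroup and about 80 percent crosses the backbone.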

- Multiprotocol Support: Switches support a multiprotocol environment for the campus network.

- Multicasting: Switches support IP multicast traffic in addition to IP unicast traffic for the campus network.

Before delving into the different switching technologies, it helps to understand the functions performed at each OSI reference model layer, because these explain how routers and switches interoperate and how the different switching technologies work.

The different OSI reference model layers and the functions that each layer performs are summarized below:

- Application Layer – provides communication services to applications, file and print services, application services, database services, and message services.

- Presentation Layer – presents data to the Application layer, defines data formats, and provides data encryption and decryption as well as compression and decompression.

- Session Layer – defines how sessions between nodes are established, maintained, and terminated, and controls and coordinates communication between nodes.

- Transport Layer – provides end-to-end data transport services.

- Network Layer – transmits packets from the source network to the specified destination network and defines end-to-end packet delivery, end-to-end error detection, routing functions, fragmentation, packet switching, and packet sequence control.

- Data-Link Layer – translates messages into bits for the Physical layer to transmit and formats messages into data frames.

- Physical Layer – deals with the sending and the receiving of bits and with the actual physical connection between the computer and the network medium.

Data encapsulation is the process whereby the information in a protocol is wrapped within another protocol’s data section. When a layer of the OSI reference model receives data, the layer places this data behind its header and before its trailer, and thus encapsulates the higher layer’s data. In short, each layer encapsulates the layer directly over it when data moves through the protocol stack. Since the Physical layer does not use headers and trailers, no data encapsulation is performed at this layer.

Each OSI reference model layer exchanges Protocol Data Units (PDUs) with its peer layer on the other device. A PDU consists of the data received from the layer above plus the control information that the layer adds.

Each of the following layers has a unique name for its protocol data unit:

- Transport layer = segment

- Network layer = packet

- Data Link layer = frame

- Physical layer = bits

The data encapsulation process is illustrated below:

- The user creates the data.

- At the Transport layer, data is changed into segments.

- At the Network layer, segments are changed into packets or datagrams and routing information is appended to the protocol data unit.

- At the Data Link layer, the packets or datagrams are changed to frames.

- At the Physical layer, the frames are changed into bits – 1s and 0s are encoded in the digital signal and are then transmitted.
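The encapsulation steps above can be sketched in a few lines of Python. This is only an illustration: the class names, field names, addresses, and port numbers are all made up for the example, and real headers carry many more fields.

```python
from dataclasses import dataclass


@dataclass
class Segment:              # Transport layer PDU
    src_port: int
    dst_port: int
    payload: bytes


@dataclass
class Packet:               # Network layer PDU
    src_ip: str
    dst_ip: str
    payload: Segment


@dataclass
class Frame:                # Data Link layer PDU
    src_mac: str
    dst_mac: str
    payload: Packet
    trailer: str = "FCS"    # placeholder for the frame check sequence


def encapsulate(data: bytes) -> Frame:
    """Wrap user data in a segment, then a packet, then a frame."""
    # All addresses and ports below are hypothetical example values.
    segment = Segment(src_port=49152, dst_port=80, payload=data)
    packet = Packet(src_ip="10.0.0.1", dst_ip="10.0.0.2", payload=segment)
    return Frame(src_mac="aa:aa:aa:aa:aa:aa", dst_mac="bb:bb:bb:bb:bb:bb",
                 payload=packet)


frame = encapsulate(b"hello")
# Unwrapping frame -> packet -> segment gives back the original user data.
print(frame.payload.payload.payload)
```

Each layer only sees the PDU of the layer above as an opaque payload, which is why a Layer 2 switch can forward the frame without looking at the packet inside it.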

Layer 2 Switching Overview

While an Ethernet switch utilizes the same logic as a transparent bridge, switches use hardware to learn addresses to make filtering and forwarding decisions. Bridges, on the other hand, utilize software running on general purpose processors. Switches provide more features and functions when compared to bridges. Switches have more physical ports as well.

The basic forward and filter logic that a switch uses is illustrated here:

- The frame is received.

- When the destination is a unicast address, the address exists in the address table, and the interface is not the same interface where the frame was received, the frame is forwarded.

- When the destination is a unicast address and the address does not exist in the address table, the frame is forwarded out on all ports.

- When the destination is a broadcast or multicast address, the frame is forwarded out on all ports.
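The forward and filter logic above can be written as a small decision function. This is a minimal sketch, not how any particular switch implements it: the function and variable names are hypothetical, the address table is a plain dictionary, and "all ports" is taken to mean all ports except the one the frame arrived on.

```python
BROADCAST = "ff:ff:ff:ff:ff:ff"


def is_group_address(mac):
    """Broadcast and multicast MACs have the lowest bit of the first octet set."""
    return int(mac.split(":")[0], 16) & 1 == 1


def forward_decision(table, in_port, dst, ports):
    """Return the list of ports the frame should be sent out of."""
    others = [p for p in ports if p != in_port]
    if is_group_address(dst):
        return others            # broadcast or multicast: flood
    out_port = table.get(dst)
    if out_port is None:
        return others            # unknown unicast: flood
    if out_port == in_port:
        return []                # destination is on the arrival segment: filter
    return [out_port]            # known unicast: forward on one port


table = {"aa:aa:aa:aa:aa:01": 1, "aa:aa:aa:aa:aa:02": 2}
ports = [1, 2, 3]
print(forward_decision(table, 1, "aa:aa:aa:aa:aa:02", ports))  # known unicast
print(forward_decision(table, 1, "aa:aa:aa:aa:aa:03", ports))  # unknown unicast
print(forward_decision(table, 1, BROADCAST, ports))            # broadcast
```

The third branch covers a case the list does not spell out: when the destination is known but sits on the same interface the frame came from, the switch has nothing to do and drops (filters) the frame.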

Switches utilize application-specific integrated circuits (ASICs) to create filter tables and to maintain these tables' content. Because Layer 2 switches do not reference Network layer header information, they are faster than both bridges and routers. Layer 2 switching is hardware based. The network is filtered using the Media Access Control (MAC) addresses of the hosts' network interface cards (NICs).

In short, switches use the frame's hardware addresses to determine whether the frame should be forwarded or dropped. Layer 2 switching does not change the data packet; only the frame encapsulating the packet is read. This makes switching a faster process than routing.

The primary differences between bridges and Layer 2 switches are listed here:

- Bridges utilize software running on general purpose processors to make decisions, which essentially makes bridges software based. Switches use hardware to learn addresses and to make filtering and forwarding decisions.

- For each bridge, only one spanning-tree instance can exist. Switches, on the other hand, can have multiple spanning-tree instances.

- Bridges support a maximum of 16 ports, whereas one switch can have hundreds of ports.

A few Layer 2 switching benefits include:

- Low cost

- Hardware-based bridging

- High speed

- Wire speed

- Low latency

- Increases bandwidth for each user

There are a few limitations associated with Layer 2 switching. Broadcasts and multicasts can cause issues when the network expands. Another issue is the slow convergence time of the Spanning-Tree Protocol (STP). Collision domains also have to be broken up correctly.

Switch Functions Associated with Layer 2 Switching

Layer 2 switching has three main functions:

- Address learning: Layer 2 switches use a MAC database known as the MAC forward/filter table to create and maintain information on which interfaces the sending devices are located on. The forward/filter table contains information on the source hardware address of each frame received. The MAC forward/filter table contains no information when a Layer 2 switch starts for the first time. When a frame is received, the switch examines the frame’s source address and adds this information to the MAC forward/filter table. Because the switch does not know where the device is located that the frame should be sent to, the switch floods the network with the frame. When a device responds by returning a frame, the switch adds the MAC address from that particular frame to the MAC forward/filter table as well. Next, the two devices establish a point-to-point connection and the frame is forwarded between the two.

- Making forwarding and filtering decisions: When a frame is received on a switch interface, the switch examines the frame’s destination hardware address then compares this address to the information contained within the MAC forward/filter table:

  - If the destination hardware address is listed in the MAC forward/filter table, the frame is forwarded out the correct exit or destination interface only. Bandwidth on the other network segments is preserved because only the correct destination interface is used. This concept is known as frame filtering.

  - If the destination hardware address is not listed in the MAC forward/filter table, the frame is flooded out all active interfaces, but not on the specific interface on which the frame was received. When a device responds by returning a frame, the switch adds the MAC address from that particular frame to the MAC forward/filter table. Next, the two devices establish a point-to-point connection and the frame is forwarded between the two.

  - If a server transmits a broadcast on the LAN, the switch floods the frame out all its ports by default.

- Ensuring loop avoidance: Network loops can typically occur when there are numerous connections between switches. Multiple connections between switches are usually created to allow redundancy. To prevent network loops from occurring and to still maintain redundant links between switches, the Spanning-Tree Protocol (STP) can be used.
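The first two functions, address learning and forwarding/filtering, can be traced with a short sketch. The `LearningSwitch` class, the host names, and the port numbers are all hypothetical, and broadcast handling and entry aging are left out to keep the focus on how the table fills up.

```python
class LearningSwitch:
    def __init__(self, ports):
        self.ports = list(ports)
        self.mac_table = {}      # MAC address -> port; empty at startup

    def receive(self, in_port, src, dst):
        """Learn the source address, then return the ports to send the frame out of."""
        self.mac_table[src] = in_port                 # address learning
        out_port = self.mac_table.get(dst)
        if out_port is None:                          # unknown destination: flood
            return [p for p in self.ports if p != in_port]
        if out_port == in_port:                       # same segment: filter
            return []
        return [out_port]                             # known destination: forward


switch = LearningSwitch(ports=[1, 2, 3])
first = switch.receive(1, "host-a", "host-b")   # host-b is unknown, so the frame is flooded
reply = switch.receive(2, "host-b", "host-a")   # host-a was learned on port 1
again = switch.receive(1, "host-a", "host-b")   # both hosts are known now
print(first, reply, again)  # [2, 3] [1] [2]
```

After one frame in each direction the table holds both hosts, and every later frame between them uses a single port instead of being flooded.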

The common problems caused by creating redundant links between switches are listed here:

- While redundant links between switches can provide a few features, remember that frames can in fact be broadcast from all redundant links at the same time. This could possibly result in the creation of network loops.

- Because frames can be received from a number of segments simultaneously, devices can end up receiving multiple copies of the exact frame. This results in additional network overhead.

- When no loop avoidance mechanisms are used, a broadcast storm can occur. Broadcast storms occur when switches flood broadcasts continuously all over the internetwork.

- The user could end up with multiple loops generating all over an internetwork – loops are being created within other loops! This could result in no switching being performed on the network.

- The MAC forward/filter table’s data could become ineffective when switches can receive a frame from multiple links. This is typically due to the table not being able to establish the device’s location.

- Switches can also end up continuously adding source hardware address information to the MAC forward/filter table, to the point that the switch no longer sends frames.
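To see why a loop is so damaging, the following sketch counts how many copies of a single broadcast frame are in flight after each hop when no loop avoidance is used. It is a simplified model under stated assumptions: switches are named by letters, every link is treated as an inter-switch link, each switch floods a copy out of every link except the one it arrived on, and the function name `flood_rounds` is made up for the example.

```python
def flood_rounds(links, start, rounds):
    """Count copies of one broadcast in flight after each hop, with no loop avoidance."""
    neighbors = {}
    for a, b in links:
        neighbors.setdefault(a, []).append(b)
        neighbors.setdefault(b, []).append(a)

    # Each in-flight copy is (sender, receiver). The host's frame enters at `start`.
    in_flight = [(None, start)]
    counts = []
    for _ in range(rounds):
        next_flight = []
        for sender, receiver in in_flight:
            for nxt in neighbors[receiver]:
                if nxt != sender:        # flood everywhere except the arrival link
                    next_flight.append((receiver, nxt))
        in_flight = next_flight
        counts.append(len(in_flight))
    return counts


triangle = [("A", "B"), ("B", "C"), ("A", "C")]   # the redundant A-C link closes a loop
chain = [("A", "B"), ("B", "C")]                  # same switches with the loop removed

print(flood_rounds(triangle, "A", 4))  # [2, 2, 2, 2]: copies circulate forever
print(flood_rounds(chain, "A", 4))     # [1, 1, 0, 0]: copies die out
```

In the triangle the two copies never disappear, and in a more densely meshed topology the count grows with every hop. Blocking one redundant link, which is what STP does, turns the triangle into the chain.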

Routing Overview

While routers and Layer 3 switches can be considered similar in concept, their design differs. Before discussing Layer 3 switching, some important factors on routing and routers will be summarized.

Routers operate at the Network layer of the OSI reference model to route data to remote destination networks. Routers use Layer 3 headers and logic to route packets. To make routing decisions, routers use a Routing table that describes how the remote destination networks can be reached. Cisco routers maintain a Routing table for each network protocol. Using routers can be considered a better option than using bridges. While bridges filter by MAC address, routers filter by IP address. A bridge forwards a packet to all segments that it is connected to. A router, on the other hand, only forwards the packet to the particular network segment that the packet is intended for. By default, a router does not forward broadcast and multicast frames.
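A Routing table lookup can be sketched with Python's standard `ipaddress` module. This is a minimal illustration, assuming a static table and the usual longest-prefix-match rule; the networks and interface names are invented for the example and do not come from any real configuration.

```python
import ipaddress

# Hypothetical routing table: (destination network, outgoing interface).
ROUTES = [
    (ipaddress.ip_network("10.1.0.0/16"), "Ethernet0"),
    (ipaddress.ip_network("10.1.2.0/24"), "Ethernet1"),
    (ipaddress.ip_network("0.0.0.0/0"), "Serial0"),      # default route
]

LIMITED_BROADCAST = ipaddress.ip_address("255.255.255.255")


def lookup(dst):
    """Return the outgoing interface for dst, or None if the packet is not forwarded."""
    addr = ipaddress.ip_address(dst)
    if addr == LIMITED_BROADCAST:
        return None                       # broadcasts stay in the local broadcast domain
    matches = [(net, iface) for net, iface in ROUTES if addr in net]
    if not matches:
        return None                       # no route to the destination
    # The most specific (longest prefix) matching route wins.
    _, iface = max(matches, key=lambda m: m[0].prefixlen)
    return iface


print(lookup("10.1.2.7"))         # Ethernet1
print(lookup("10.1.9.9"))         # Ethernet0
print(lookup("192.0.2.1"))        # Serial0
print(lookup("255.255.255.255"))  # None
```

Unlike the flooding behavior of a bridge, the lookup always returns at most one interface, which is the segment the packet is intended for.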

A few benefits of routing are listed here:

- Routers do not forward broadcasts and multicasts by default, which decreases the impact of broadcast and multicast traffic. Unlike switches and bridges, routers contain broadcasts within localized broadcast domains.

- Routers perform optimal path determination. Each packet is checked and the router only forwards the packet to the particular network segment for which it is intended.

- The routing protocol configured on the router, path metrics, source service access points (SSAPs), and destination service access points (DSAPs) are utilized to make these informed routing decisions.

- Routers provide traffic management and security.

- Logical Layer 3 addressing is another benefit.

Layer 3 Switching Overview

As mentioned previously, the main difference between Layer 3 switches and routers is the physical design. Other than this, routers and Layer 3 switches perform similar functions. Layer 3 switches can be placed anywhere in the network to process high performance LAN traffic. In fact, Layer 3 switches can replace routers.

The main functions of Layer 3 switches are listed here:

- Use logical addressing to determine the paths to destination networks.

- Layer 3 switches can provide security.

- Layer 3 switches can also provide Management Information Base (MIB) information to Simple Network Management Protocol (SNMP) managers.

- Layer 3 switches can use Time to Live (TTL).

- They can respond to and process any option information.

A few benefits of Layer 3 switching include:

- High performance packet switching.

- Hardware based packet forwarding occurs.

- High speed scalability.

- Low cost and low latency.

- Provides security.

- Flow accounting capabilities.

- Quality of service (QoS).

Layer 4 Switching Overview

Layer 4 switching is a Layer 3 hardware based switching technology that can also provide routing over Layer 3. Layer 4 switching works by taking into account the application that was used. Layer 4 switching examines the port numbers contained within the Transport layer header to make routing decisions. The ports in the Transport layer header pertain to the upper layer protocol or application and are defined in Request for Comments (RFC) 1700.

The characteristics of Layer 4 switching are listed here:

- A Layer 4 switch has to maintain a larger filter table than the table Layer 3 and Layer 2 switches maintain.

- A Layer 4 switch can be configured to prioritize certain data traffic based on application. This allows administrators to define QoS for users.
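Prioritizing by application comes down to mapping Transport layer port numbers to a traffic class. The sketch below assumes a made-up policy table: the port numbers are the well-known assignments for those protocols, but the priority labels and the `classify` function are purely illustrative.

```python
# Hypothetical policy: well-known port -> priority class.
PRIORITY_BY_PORT = {
    5060: "high",     # SIP signaling
    443: "medium",    # HTTPS
    80: "medium",     # HTTP
    21: "low",        # FTP control
    25: "low",        # SMTP
}


def classify(src_port, dst_port, default="best-effort"):
    """Pick a priority class from the Transport layer port numbers."""
    # Check the destination port first, then the source port so that
    # replies from a server fall into the same class as the requests.
    for port in (dst_port, src_port):
        if port in PRIORITY_BY_PORT:
            return PRIORITY_BY_PORT[port]
    return default


print(classify(49152, 80))     # medium
print(classify(21, 50000))     # low
print(classify(49152, 9999))   # best-effort
```

A table like this needs an entry per application rather than per network, which is one reason a Layer 4 switch maintains a larger filter table than Layer 2 and Layer 3 switches.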

Multi-Layer Switching (MLS) Overview

Multi-layer switching (MLS) is the term used to describe the technology whereby Layer 2, Layer 3, and Layer 4 switching technologies are combined. MLS works on the concept of "route once, switch many": the first packet of a flow is routed, and the subsequent packets of the same flow are switched in hardware.

Multi-layer switching can make routing decisions with the following:

- The MAC source and MAC destination address within a Data Link frame.

- The IP source address and IP destination address within the Network layer header.

- The protocol defined within the Network layer.

- The port source number and port destination number specified in the Transport layer header.
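The idea of routing the first packet of a flow and switching the rest can be modeled as a cache keyed on the header fields listed above. This is a conceptual sketch only, not the Cisco implementation: `FlowKey` and `FlowCache` are hypothetical names, the MAC addresses are left out of the key for brevity, and `route_fn` stands in for the route processor.

```python
from typing import NamedTuple


class FlowKey(NamedTuple):
    src_ip: str
    dst_ip: str
    protocol: str
    src_port: int
    dst_port: int


class FlowCache:
    def __init__(self, route_fn):
        self.route_fn = route_fn    # stand-in for the (slow) route processor
        self.cache = {}             # FlowKey -> outgoing port
        self.routed = 0
        self.switched = 0

    def forward(self, key):
        """Return the outgoing port, routing only the first packet of each flow."""
        if key in self.cache:
            self.switched += 1      # cache hit: switched without routing
            return self.cache[key]
        out_port = self.route_fn(key)
        self.cache[key] = out_port  # remember the decision for later packets
        self.routed += 1
        return out_port


cache = FlowCache(route_fn=lambda key: "port-2")   # hypothetical routing decision
flow = FlowKey("10.0.0.1", "10.0.1.5", "tcp", 49152, 80)
for _ in range(5):
    cache.forward(flow)
print(cache.routed, cache.switched)  # 1 4
```

Five packets of the same flow cost one routing decision and four cache hits, which is where the latency and throughput benefits of MLS come from.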

The features of Multi-Layer Switching (MLS) are summarized below:

- High speed scalability

- Low latency

- Provide Layer 3 routing

- Transport traffic at wire speed

To read the information in the packet header, the Cisco Catalyst switches need certain hardware:

- To obtain packet header information and cache the information, Catalyst 5000 switches need the NetFlow Feature Card (NFFC).

- To obtain packet header information and cache the information, Catalyst 6000 switches need the Multilayer Switch Feature Card (MSFC) and the Policy Feature Card (PFC).

The Cisco MLS implementation requires the following components:

- Multilayer Switching Switch Engine (MLS-SE) – this is a switch that deals with moving and rewriting the packets.

- Multilayer Switching Route Processor (MLS-RP) – an MLS capable router or an RSM, RSFC, MSFC that transmits MLS configuration information and updates.

- Multilayer Switching Protocol (MLSP) – the protocol that operates between the MLS-SE and MLS-RP to enable multilayer switching.

Cisco Catalyst Switches Overview

To better understand the capabilities and features that the Cisco Catalyst switches provide, users have to understand how the Cisco hierarchical model works. Specific Catalyst switches are suited for specific layers of the Cisco hierarchical model.

To meet the requirements of a scalable network design and alleviate network congestion, Cisco recommends a hierarchical network design structure that is scalable, reliable, and cost effective. The primary objective of the Cisco hierarchical model network design is, through its different layers, to prevent unnecessary traffic from passing through to the upper layers. Only traffic that is considered applicable or relevant should be passed up to the wider network. This logic results in a reduction of network congestion, which means that the network can scale better.

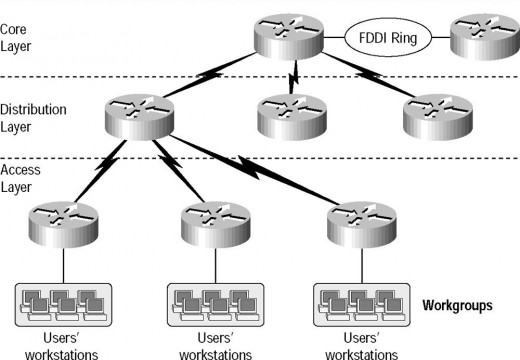

Cisco defines the following layers within the Cisco hierarchical model. Each layer has specific functions and responsibilities associated with it:

- Core Layer – transports large volumes of traffic reliably and switches traffic as fast as it can. The core should therefore have minimal latency.

- Distribution Layer – the communication mechanism between the Access layer and the Core layer of the Cisco hierarchical model. The Distribution Layer determines how packets access the Core layer, provides filtering and routing, and determines access over the campus backbone by filtering out resource updates that are not needed.

- Access Layer – controls user access to network resources. Layer 3 devices such as routers ensure that local server traffic does not move to the wider network.

The Cisco switches are designed to fall within the Cisco hierarchical model:

- Access Layer switches: These switches provide connectivity between desktop devices and the internetwork. The Cisco Catalyst switches for the Access layer are listed here:

  - 1900/2800 – provides switched 10Mbps to the desktop/10BaseT hubs in small/medium campus networks.

  - 2900 – provides 10/100Mbps switched access to a maximum of 50 users and gigabit speeds for servers.

  - 4000 – provides a 10/100/1000Mbps access to a maximum of 96 users and a maximum of 36 Gigabit Ethernet ports for servers.

  - 5000/5500 – supports 100/1000Mbps Ethernet switching and provides access for over 250 users.

- Distribution Layer switches: These switches must be capable of processing traffic from the Access layer devices, deal with a route processor, and provide multi-layer switching (MLS) support. The Cisco Catalyst switches for the Distribution layer are listed here:

  - 5000/5500 – supports a considerable number of connections and the Route Switch Module (RSM) processor module.

  - 2926G – a strong switch that utilizes an external router processor.

  - 6000 – provides 384 10/100 Ethernet connections, 192 100FX FastEthernet connections, and 130 Gigabit Ethernet ports.

- Core Layer switches: These switches must be capable of switching traffic quickly. The Cisco Catalyst switches for the Core layer are listed here:

  - 5000/5500 – the 5500 is the ideal Core layer switch, while the 5000 is the ideal Distribution layer switch. The 5000 series switches use identical modules and cards.

  - 6500 – this series of switches can provide gigabit port density, multi-layer switching, and high availability for the Core layer.

  - 8500 – provides high performance switching for the Core layer. Application-Specific Integrated Circuits (ASICs) provide multiple layer protocol support. This includes bridging, Asynchronous Transfer Mode (ATM) switching, Internet Protocol (IP), IP multicast, and Quality of Service (QoS).

Understanding LAN Switch Types

LAN switching uses the destination hardware address as the basis to forward and filter frames. LAN switch types determine the way in which a frame gets processed when the frame arrives at a switch port. The LAN switch type or switching mode that is selected has a direct impact on latency. Each LAN switch type has its own advantages and disadvantages associated with it.

The different LAN switch types are listed and explained here:

- Cut-Through (Real-Time) mode: In cut-through or real-time mode, the switch copies only the destination hardware address of an incoming frame to its onboard buffers, then checks the MAC filter table to determine whether that address is listed in it. The frame is forwarded as soon as the switch has read the destination address and determined the outgoing interface, which decreases latency. Some switches can be configured to perform cut-through switching on a per-port basis until a user-defined error threshold is reached. When this threshold is reached, the port changes over to store-and-forward mode so that it stops forwarding errors. The port returns to cut-through mode when its error rate falls below the threshold again.

- FragmentFree (Modified Cut-Through) mode: In FragmentFree or modified cut-through mode, the switch checks the first 64 bytes of a frame before the frame is forwarded. This is done to avoid forwarding collision fragments: the switch waits for the 64-byte collision window to pass because a frame damaged by a collision will show its error within that window. FragmentFree switching can be considered a modified version of the cut-through switching mode, and it is the default LAN switch type for the Catalyst 1900 switch. Compared with cut-through switching, FragmentFree switching provides better error checking with only a minimal increase in latency.

- Store-and-Forward mode: In Store-and-forward mode, the switch first performs the following functions before the frame is forwarded:

  - It waits until the entire data frame is received.

  - The entire data frame is copied onto its onboard buffers.

  - The switch next calculates the cyclic redundancy check (CRC).

  - The frame is dropped if any of the following statements is true:

    - The frame contains a cyclic redundancy check error.

    - The frame is less than 64 bytes in length.

    - The frame is over 1,518 bytes in length.

  - When the frame contains no errors, the switch checks the MAC filter table for the destination address, determines which outgoing interface to use, then forwards the frame.

The Catalyst 5000 series switches use Store-and-forward mode.
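The store-and-forward checks described above can be sketched as a single validation function. This is a simplified model under stated assumptions: the frame is a plain byte string whose last four bytes are the checksum, `zlib.crc32` is used as the CRC-32 routine, the little-endian byte order of the checksum is a simplification, and `build_frame` and `accept` are hypothetical helper names.

```python
import zlib

MIN_FRAME = 64      # bytes; anything shorter is a runt
MAX_FRAME = 1518    # bytes; anything longer is a giant
FCS_LEN = 4         # length of the frame check sequence


def build_frame(header_and_payload):
    """Pad to the minimum size and append a CRC-32 checksum (test helper)."""
    body = header_and_payload.ljust(MIN_FRAME - FCS_LEN, b"\x00")
    return body + zlib.crc32(body).to_bytes(FCS_LEN, "little")


def accept(frame):
    """Return True if a fully buffered frame passes the store-and-forward checks."""
    if len(frame) < MIN_FRAME:
        return False                      # runt: drop
    if len(frame) > MAX_FRAME:
        return False                      # giant: drop
    body, fcs = frame[:-FCS_LEN], frame[-FCS_LEN:]
    # Recompute the CRC over the body and compare it with the received value.
    return zlib.crc32(body).to_bytes(FCS_LEN, "little") == fcs


good = build_frame(b"\x01" * 20)
corrupted = bytes([good[0] ^ 0xFF]) + good[1:]   # flip the bits of the first byte

print(accept(good))                          # True
print(accept(corrupted))                     # False: CRC error
print(accept(good[:40]))                     # False: shorter than 64 bytes
print(accept(build_frame(b"\x00" * 1600)))   # False: longer than 1,518 bytes
```

Because the CRC covers the whole frame, the check can only run once the last byte has arrived, which is why store-and-forward has the highest latency of the three modes.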
